Automated Infrastructure Maintenance - Drone Inspections with Computer Vision

Infrastructure maintenance holds enormous potential for the use of artificial intelligence. The Innovation Sandbox pilot project with IBM Research and pixmap gmbh at Dübendorf military airfield shows how AI-supported drone inspections can be used for automated damage detection.

Introduction

The maintenance of infrastructure such as roads, bridges and dams offers great potential for the use of artificial intelligence (AI). AI-based image recognition can systematically and automatically detect even very small cracks and defects, yet infrastructure operators still carry out inspections largely by hand. Within the scope of the Innovation Sandbox for Artificial Intelligence (AI), IBM Research and pixmap gmbh implemented a pilot project at the Dubendorf Air Base to assess the potential of AI-based inspections. In the project, a drone captured high-quality imagery of the runway, and AI was then used to detect defects automatically. The project findings are being used to advance the use of AI for the inspection and maintenance of other infrastructure elements, and the imagery is being made available to other innovation stakeholders. Through this collaboration between public administration, the military, research and private industry, the project contributes to the further development of the Zurich Metropolitan Area as an international hub for AI.

I. Challenges of manual inspections

The maintenance and inspection of infrastructure elements such as roads, bridges and dams is of immense significance for public safety and for maintaining economic activity. At the same time, infrastructure operators face a massive and complex task: Switzerland’s road network is more than 84,000 km long.¹ More than 40,000 bridges span gorges, valleys and rivers.² With over 200 major dams and thousands of small ones, Switzerland is home to more dams than any other country in the world.³ This raises the question of how such a vast number of infrastructure elements can be monitored efficiently. Many inspection processes rely on manual surveys of cracks, defects and other irregularities, which leads to a series of challenges ranging from inefficiency to human error. The potential offered by AI-based automation has not yet been tapped. This chapter explores the four core challenges of manual inspections and then outlines the potential of automated inspection through image recognition.

Lack of efficiency

Manual inspections require a person to physically inspect the entire surface of an infrastructure element, e.g. a runway or a road section. As a result, manual inspections not only consume a great deal of time and human resources but also tend to make maintenance cycles longer than necessary. Walking or driving across infrastructure elements to inspect them causes delays that automated systems could avoid. Manual inspections also tend to be very costly.

Poor documentation

Although manual inspections do provide data on defects and issues, they often lag behind what is technically possible today. In many cases, instead of a comprehensive digital image (digital twin) that continuously documents the condition of an infrastructure element over a long period, only a list of problem areas or defects is compiled. The location of the defects is often indicated only roughly, which makes them harder to fix. Without a digital model with automated recognition, the data is also often not integrated into operating systems and therefore cannot serve as a basis for decision-making.

Human error

Humans make mistakes, which can be due to lapses in concentration, especially during repetitive and tedious tasks such as inspecting long stretches of road. There is also the issue of consistency: what one person considers a defect, another may deem insignificant. Unrecorded or mixed-up defects can lead to expensive repairs or even risks to the infrastructure.

Safety hazard

Manual inspections often pose risks to inspection staff, especially when the work involves exposed locations, such as the vicinity of high-voltage lines or great heights. Accidents occur every year during manual inspections. Although safety equipment and training can reduce these risks, the question remains whether it makes sense to expose people to them when technological alternatives are available.

Potential for automated inspections

These challenges point to the major potential of automated inspections. Progress in image recognition technology is opening up new ways to overcome some of these challenges and to significantly increase the efficiency and precision of inspection processes. In this project, stakeholders from public administration, research and industry joined forces to contribute to automated infrastructure maintenance. IBM Research submitted a project proposal to the Innovation Sandbox for AI in spring 2022. The idea was to build on experience gained in previous projects with AI-based bridge inspections and to extend this knowledge to airport runways. The Innovation Sandbox for AI supported this initiative by providing high-quality image data and by engaging the Dubendorf Air Base as a project partner from the innovation ecosystem of the Zurich Metropolitan Area. The imagery was created in collaboration with pixmap gmbh, a company specialising in inspections and surveys with drones and flying robots.

The remainder of this report is structured as follows: chapter two describes the process and insights gained from the drone missions carried out by pixmap gmbh. Chapter three gives an overview of the automated evaluation of the imagery using IBM Research’s image recognition technology. Chapter four draws conclusions and outlines several areas of action for advancing automated inspections of infrastructure elements in the Zurich Metropolitan Area. The appendix describes the technical details of IBM Research’s image recognition.

II. Use of drones to create imagery

Comprehensive imagery is the foundation for automated detection of cracks and defects in infrastructure elements. Such data can be obtained through various methods and from various sources. In some cases, freely accessible satellite images will suffice, e.g. when damage to infrastructure is clearly visible at low resolution. However, if an in-depth inspection requires high-resolution imagery, targeted image capture is unavoidable. Images can be captured from a ground vehicle or from the air, e.g. with a drone. In this case, pixmap gmbh used drones. Drones have the considerable advantage of producing systematic, repeatable, high-resolution images that are accurate to the centimetre. Furthermore, drones are easy to transport and can be operated at relatively low cost.

The Dubendorf Air Base made its runway, which is more than 2.8 km long, available for the pilot project. The airport operator defined a representative runway section measuring 200 m × 40 m. pixmap gmbh photographed this area with a drone at the maximum quality required and then made the data available to IBM Research for analysis. The very high resolution allowed IBM’s AI team to evaluate whether lower-resolution imagery would have been sufficient for automated analysis of cracks and defects. This is especially relevant for the operational use of automated inspections, e.g. if optimum quality is too costly or if data collection would be too time-consuming for regular maintenance work.

In the given context, planning the use of drones meant taking several challenges into account.

- Regulations – depending on the mission and location, regulatory requirements apply to the use of drones. With the adoption of the EU/EASA drone regulations in Switzerland on 1 January 2023, the statutory provisions have become even stricter. To obtain an operating licence, drone operators must demonstrate that risks to third parties on the ground (ground risk) and collisions with other aircraft (air risk) can in all likelihood be avoided. In the present case, the runway was closed and the drone’s flight altitude was limited to a maximum of 10 m, so that other airfield operations with helicopters were not affected.

- Drone/camera requirements – images with sub-millimetre resolution require a high-quality camera on a drone with precision GPS. Modern drones can be equipped with full-frame cameras (36 × 24 mm sensor) with resolutions of 40 to 100 MP. The camera also needs short shutter speeds to avoid motion blur and a short trigger interval, in the present case 0.7 s. To steer the drone across the runway with centimetre accuracy, it has to be equipped with a special GPS system (RTK-GNSS) and be able to fly autonomously along predefined waypoints.

- Dependency on weather – missions of this kind depend on the weather. The runway must be dry, the drone must not cast a shadow on the captured surface, and strong gusts of wind must be avoided. Reserve dates and times are therefore important when planning drone flights.
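How altitude, camera and flight speed interact can be made concrete with the standard nadir ground sample distance (GSD) relation, GSD = sensor width × altitude / (focal length × image width in pixels), and with the motion blur accumulated during one exposure, blur = speed × exposure time. The sketch below assumes a hypothetical full-frame camera (36 mm sensor width, 9,504 pixels across, 50 mm lens) and a hypothetical 1/4000 s exposure; these values are illustrative and are not the equipment used in the missions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    """Hypothetical camera parameters (not the missions' actual equipment)."""
    sensor_width_mm: float   # full-frame sensor: 36 mm wide
    image_width_px: int      # pixels across the sensor width
    focal_length_mm: float


def ground_sample_distance_mm(cam: Camera, altitude_m: float) -> float:
    """Ground footprint of one pixel, in millimetres, for a nadir image."""
    altitude_mm = altitude_m * 1000.0
    return cam.sensor_width_mm * altitude_mm / (cam.focal_length_mm * cam.image_width_px)


def motion_blur_px(speed_m_s: float, exposure_s: float, gsd_mm: float) -> float:
    """Distance travelled during one exposure, expressed in pixels."""
    travel_mm = speed_m_s * 1000.0 * exposure_s
    return travel_mm / gsd_mm


cam = Camera(sensor_width_mm=36.0, image_width_px=9504, focal_length_mm=50.0)
gsd = ground_sample_distance_mm(cam, altitude_m=3.0)
blur = motion_blur_px(speed_m_s=0.7, exposure_s=1 / 4000, gsd_mm=gsd)
print(f"GSD at 3 m: {gsd:.3f} mm/px, motion blur at 0.7 m/s: {blur:.2f} px")
# prints: GSD at 3 m: 0.227 mm/px, motion blur at 0.7 m/s: 0.77 px
```

The functions show why very fine resolution goes hand in hand with low altitude, slow flight and short exposures: halving the GSD doubles the blur, measured in pixels, for the same speed and exposure time.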

In the project at hand, pixmap gmbh carried out the drone missions as planned on 13 May 2023.

To obtain as broad a data basis as possible, the main mission was extended: three missions with different collection parameters were flown.

Comparison of the three drone missions

| Comparison | Mission 1 (M1) | Mission 2 (M2) | Mission 3 (M3) |
|---|---|---|---|
| Objective | Best resolution | Scalability to entire runway | Mapping/photogrammetry |
| Resolution | 0.25 mm | 0.75 mm | 0.60 mm |
| Flight speed | 0.7 m/s | 4.2 m/s | 1.1 m/s |
| Flight time | 120 min | 10 min | 60 min for approx. ⅓ |
| Number of images taken | ~11,500 | ~1,200 | 1 orthophoto |

Mission 1 – a new resolution level

The typical resolution of drone imagery in surveying is between 1 cm and 3 cm. This mission went far beyond that. The required maximum resolution of 0.25 mm, compared with 1 cm, corresponds to a linear factor of 40, or 40² = 1,600 times more pixels per unit area. This led to extreme flight parameters: equipped with a high-performance camera, the drone had to fly at an altitude of just 3 m. A very slow flight speed of 0.7 m/s and one picture every 0.7 s were needed to record slightly overlapping images and to avoid motion blur. This resulted in very long flight times totalling 2 hours, with approximately 11,500 pictures taken.
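The figures in this paragraph can be checked with a few lines of arithmetic: the linear resolution factor, its square (the increase in pixels per unit area), and the ground distance the drone covers between two shots at the stated speed and trigger interval. Resolutions are expressed in micrometres so that the division is exact.

```python
# Resolutions in micrometres (integers keep the division exact).
TYPICAL_GSD_UM = 10_000   # 1 cm, lower end of typical survey drone imagery
M1_GSD_UM = 250           # 0.25 mm, Mission 1

linear_factor = TYPICAL_GSD_UM / M1_GSD_UM   # finer sampling along each axis
area_factor = linear_factor ** 2              # more pixels per unit area

SPEED_M_S = 0.7           # Mission 1 flight speed
TRIGGER_INTERVAL_S = 0.7  # time between two exposures
spacing_m = SPEED_M_S * TRIGGER_INTERVAL_S    # ground distance between shots

print(linear_factor, area_factor, round(spacing_m, 2))  # 40.0 1600.0 0.49
```

Consecutive images are therefore taken roughly every half metre, which is why the images only need to overlap slightly when the footprint of each image is only a little larger than that spacing.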

Mission 2 – lower resolution as an alternative

Whereas mission 1 (M1) focused on maximum resolution, mission 2 (M2) centred on scalability, i.e. on the option of later scanning an entire runway.

A slightly lower resolution of 0.75 mm made capture roughly ten times faster, with a correspondingly smaller data volume.

Mission 3 – focus on mapping

The aim of this mission (M3) was to create an even more comprehensive overall image of the runway using photogrammetry. For this purpose, the individual images were combined into a single georeferenced orthophoto and a digital elevation model using specialised software (Pix4Dmapper). Instead of thousands of individual pictures, this yields a single undistorted overall image that can then be analysed further. However, photogrammetric capture is only possible with significantly higher overlap between the individual images, which in turn reduces the achievable resolution. For photogrammetric capture of the entire runway, a resolution of approx. 1.5 to 2 mm can be achieved with today’s technology. For the runway section defined in this project, a resolution of 0.6 mm was achieved.
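One way to see why higher overlap costs resolution: if flight speed v and trigger interval t are fixed, consecutive images are v·t apart on the ground, while an image with N pixels along the flight direction at GSD g covers g·N. A forward overlap o then requires g·N·(1 − o) ≥ v·t, so the finest achievable GSD is g = v·t / (N·(1 − o)). The sketch below assumes a hypothetical 6,336-pixel along-track image dimension, the M3 speed of 1.1 m/s and a 0.7 s trigger interval; it illustrates the trend only and does not reproduce pixmap gmbh’s actual mission planning.

```python
def finest_gsd_mm(speed_m_s: float, trigger_interval_s: float,
                  along_track_px: int, forward_overlap: float) -> float:
    """Finest GSD (mm/px) that still yields the requested forward overlap.

    Consecutive images are speed * interval apart; each covers
    gsd * along_track_px along track, so overlap o requires
    gsd * along_track_px * (1 - o) >= spacing.
    """
    if not 0.0 <= forward_overlap < 1.0:
        raise ValueError("forward_overlap must be in [0, 1)")
    spacing_mm = speed_m_s * trigger_interval_s * 1000.0
    return spacing_mm / (along_track_px * (1.0 - forward_overlap))


# Hypothetical values: 1.1 m/s, 0.7 s trigger interval, 6,336 px along track.
for overlap in (0.2, 0.6, 0.8):
    gsd = finest_gsd_mm(1.1, 0.7, 6336, overlap)
    print(f"overlap {overlap:.0%}: finest GSD {gsd:.2f} mm/px")
```

Under these assumptions, raising the overlap from 60 % to 80 % doubles the finest achievable GSD, because the usable advance per image is halved.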

Conclusions drawn from the drone missions

The results are positive: all missions were completed successfully at the first attempt and produced high-quality data straight away, without any gaps in coverage. The comprehensive analysis by IBM Research (see chapter three) shows that runway defects can be detected automatically even at the slightly reduced resolution of 0.75 mm (M2). This means that the method applied here can already be used today for entire runways or longer road sections. Such missions need to be planned carefully with the necessary know-how and carried out with high-quality equipment, focusing on goals accurately defined by the operator. As drone technology advances, more demanding requirements such as photogrammetric capture in the sub-millimetre range will soon become feasible, with effort and cost likely to decrease. Drones are therefore of major significance for the inspection of infrastructure elements.

III. Automated visual inspection with AI foundation models

IBM Research used high-resolution imagery from pixmap’s three drone missions to develop automated AI-based inspection methods.

Advances in deep learning are enabling more and more image recognition applications that were previously considered impossible. These fast-paced developments are driven by the availability of annotated data and of specialised hardware such as graphics processing units (GPUs), which facilitate the training of AI models. Deep learning is a branch of machine learning in which models learn from large amounts of data. For this project, the latest advances in deep learning were used to develop technology that detects small cracks in infrastructure.

One of the main challenges of the project was that annotated data on civil infrastructure is rarely publicly available and most image data does not reveal any visible defects. Solutions like few-shot learning, transfer learning and self-supervised learning are being explored to overcome this challenge.

IBM Research Zurich brought specialist expertise in automated visual inspection of civil infrastructure to the project, particularly for concrete bridge pillars. The technologies used aim to reduce infrastructure maintenance costs through automated inspections, to facilitate systematic documentation of structures and to enable risk assessments. Current limitations were also discussed and recommendations for the future given. The appendix of this report describes in detail the methods and technologies behind IBM Research’s visual defect detection.

Data organisation

pixmap gmbh carried out three collection missions with different requirements, which was particularly relevant with regard to data volume. This allowed IBM Research to optimise both the efficiency and the accuracy of working with the collected image data. Mission 1 (M1) aimed for the highest level of detail, which, however, directly affected the drones’ total flight time, the evaluation time, and the scope and cost of data processing. To address the challenges of handling large data volumes and to reduce the cost of AI-supported drone inspections, the requirements were relaxed for M2, with a target GSD (ground sampling distance) of 0.75 mm/pixel, a threefold relaxation compared with M1. Because the number of pixels per unit area scales with the square of the GSD, this reduced the data volume by a factor of nine (3² = 9), saving time and storage space during data processing. IBM Research also observed secondary benefits, such as a reduced need for image overlap and tolerance of slightly more motion blur. Besides further reducing capture time and data volume, these changes made for an efficient, streamlined process while still ensuring data quality sufficient for analysis. Under these circumstances, the higher risk of overlooking small defects in M2 was acceptable. Mission 3 (M3) served primarily as a comparison mission with specific overlap requirements and covered a smaller total area. IBM Research analysed the large volume of image data (> 15,000 high-resolution images in total) separately for each mission to find the best way of organising and processing the data in terms of detail, accuracy and efficiency.
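The factor of nine follows from the fact that the number of pixels per unit area scales with the inverse square of the GSD. The minimal sketch below counts the raw pixels of an ideal, gap-free mosaic of the 200 m × 40 m test section at the M1 and M2 resolutions; image overlap and file compression, which affect the real data volume, are deliberately ignored.

```python
SECTION_AREA_M2 = 200 * 40      # runway test section from the pilot project
UM2_PER_M2 = 10**12             # square micrometres in one square metre


def mosaic_pixels(area_m2: int, gsd_um: int) -> int:
    """Pixels needed to cover area_m2 once at the given GSD (no overlap)."""
    return area_m2 * UM2_PER_M2 // (gsd_um * gsd_um)


m1 = mosaic_pixels(SECTION_AREA_M2, 250)   # Mission 1: 0.25 mm
m2 = mosaic_pixels(SECTION_AREA_M2, 750)   # Mission 2: 0.75 mm
print(f"M1: {m1 / 1e9:.0f} gigapixels, M2: {m2 / 1e9:.1f} gigapixels, ratio {m1 / m2:.0f}")
# prints: M1: 128 gigapixels, M2: 14.2 gigapixels, ratio 9
```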

Image stitching algorithm

For all three missions, the main objective of image stitching was to merge many individual images into a large, detailed and accurate overview image showing the entire section of the runway. The challenge lay in the extreme precision required to show cracks and defects in detail.

IBM Research developed a special algorithm that operates in multiple phases to merge the images efficiently and accurately. The method focussed on minimising positioning and alignment errors to create a coherent and precise overall image. To effectively process the immense volume of data, the dataset was divided into smaller segments that were processed independently and merged later.

Key aspects of image composition included:

- Extreme accuracy – the GPS system used by pixmap gmbh positioned the individual images with an accuracy of up to one centimetre. Although this is very precise by conventional standards, at a resolution of 0.25 mm one centimetre still corresponds to about 40 pixels, which made precise image composition very demanding.

- Complex algorithms – the developed algorithm worked in multiple stages to optimise, align and ultimately merge the data into one large image.

- Data management – in order to manage the immense quantity of data, it was broken down into smaller, manageable parts that were later merged together again.
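The divide-and-merge strategy described above can be sketched as grouping images into spatial tiles according to their recorded positions, with a margin so that neighbouring tiles share some images and can later be joined. This is an illustrative assumption, not IBM Research’s algorithm; the `ImageRecord` type, the 20 m tile size and the 2 m margin are hypothetical.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageRecord:
    """Hypothetical image metadata: file name and local position in metres."""
    name: str
    x_m: float  # along the runway
    y_m: float  # across the runway


def partition_into_tiles(images, tile_m=20.0, margin_m=2.0):
    """Assign each image to every tile whose extent (plus margin) contains it.

    Tile (ix, iy) covers [ix * tile_m, (ix + 1) * tile_m) along each axis.
    Images near a border land in two or more tiles, providing the shared
    content needed to merge independently stitched tiles afterwards.
    """
    tiles = defaultdict(list)
    for img in images:
        # Range of tile indices whose expanded extent covers this image.
        ix_lo = math.floor((img.x_m - margin_m) / tile_m)
        ix_hi = math.floor((img.x_m + margin_m) / tile_m)
        iy_lo = math.floor((img.y_m - margin_m) / tile_m)
        iy_hi = math.floor((img.y_m + margin_m) / tile_m)
        for ix in range(ix_lo, ix_hi + 1):
            for iy in range(iy_lo, iy_hi + 1):
                tiles[(ix, iy)].append(img.name)
    return dict(tiles)


tiles = partition_into_tiles([
    ImageRecord("a.jpg", 5.0, 5.0),
    ImageRecord("b.jpg", 19.0, 5.0),   # near the border: shared by two tiles
    ImageRecord("c.jpg", 30.0, 5.0),
])
print(tiles)  # {(0, 0): ['a.jpg', 'b.jpg'], (1, 0): ['b.jpg', 'c.jpg']}
```

Each tile can then be stitched on its own (and in parallel), and the shared images provide the overlap for aligning adjacent tiles in the final merge.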

For the sake of processing efficiency, methods were implemented to save and reuse already processed data, thus saving valuable computing time.
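A common way to save and reuse processed data is to key each result on a fingerprint of its inputs, so that unchanged inputs are never processed twice. The minimal sketch below uses a SHA-256 content hash and an on-disk JSON cache; the `process_tile` function and the cache layout are hypothetical placeholders, not IBM Research’s implementation.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def cache_key(tile_id: str, image_names: list, params: dict) -> str:
    """Fingerprint of everything that determines a tile's result."""
    payload = json.dumps(
        {"tile": tile_id, "images": sorted(image_names), "params": params},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def cached(cache_dir: Path, key: str, compute):
    """Return the stored result for key, or compute and store it once."""
    path = cache_dir / f"{key}.json"
    if path.exists():
        return json.loads(path.read_text())
    result = compute()
    cache_dir.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(result))
    return result


calls = []


def process_tile():  # hypothetical stand-in for an expensive stitching step
    calls.append(1)
    return {"offset_px": [12, -3]}


with tempfile.TemporaryDirectory() as tmp:
    key = cache_key("0_0", ["b.jpg", "a.jpg"], {"scale": 0.5})
    first = cached(Path(tmp), key, process_tile)
    second = cached(Path(tmp), key, process_tile)  # served from the cache
print(first == second, len(calls))  # True 1
```

Because the image list is sorted before hashing, the same set of inputs always produces the same key regardless of the order in which the images were listed.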

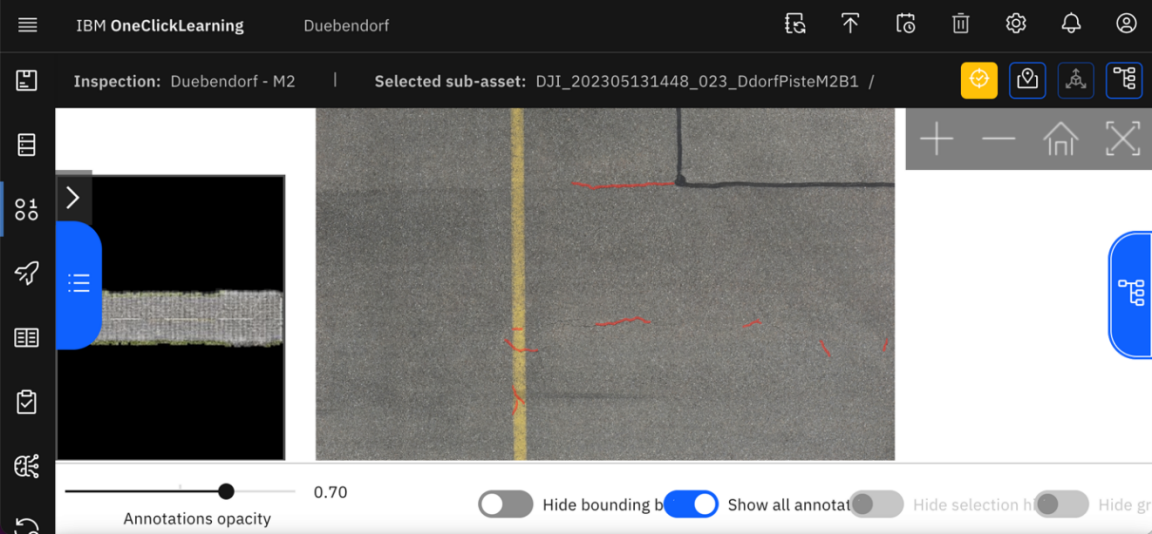

AI foundation model technology for reliable crack detection

IBM Research’s foundation model for crack detection builds on automated visual inspection data from a broad range of civil infrastructure. It uses vision transformers and self-supervised learning and is trained in several stages: first on general images, then on existing images of civil infrastructure such as bridges, and finally it is fine-tuned for the specific task of detecting cracks. Applied to the images from missions M1 and M2, the model delivered reliable results, despite some noisy detections along the edge between the kerb and the runway surface. The M2 data proved sufficient for analysing and detecting relevant cracks and offered a good balance between quality and effort, making it feasible to capture the entire runway in half a day.
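The half-day estimate can be roughly checked by scaling Mission 2, which needed about 10 minutes of flight for the 200 m test section. The sketch assumes, hypothetically, that the full runway is 2.8 km long (the text says "more than 2.8 km", so this is a lower bound), that it has the same width as the test section, and that flight time scales linearly with length; the time for setup, battery changes and repeat flights is not quantified in the report and is not modelled.

```python
M2_FLIGHT_MIN = 10          # Mission 2 flight time for the test section
SECTION_LENGTH_M = 200      # test section length (same width assumed below)
RUNWAY_LENGTH_M = 2800      # "more than 2.8 km"; used here as a lower bound


def scaled_flight_minutes(runway_length_m: float) -> float:
    """Flight time if it scales linearly with length at constant width."""
    return M2_FLIGHT_MIN * runway_length_m / SECTION_LENGTH_M


minutes = scaled_flight_minutes(RUNWAY_LENGTH_M)
print(f"{minutes:.0f} min of flight ({minutes / 60:.1f} h)")  # 140 min of flight (2.3 h)
```

Under these assumptions, pure flight time is well below half a day, leaving the remainder of that window for the ground operations around the flights.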

Overview of number of detected crack instances for missions M1 and M2

| Mission | M1 | M2 | M2 vs. M1 |
|---|---|---|---|
| Total crack instances | 3,920 | 2,629 | 32.9% fewer |
| Confidence > 0.5 | 691 | 586 | 15.2% fewer |

The AI model was run in a sensitive mode and therefore detected many cracks. The figures in the second row of the table are lower because only crack instances with a confidence of at least 0.5 were counted. Here, the difference between M1 and M2 is proportionally much smaller, which suggests that the model delivers good results even with the lower-resolution M2 imagery.
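The comparison in the table amounts to filtering detections by confidence and computing the relative reduction between missions. The sketch below reproduces the reported percentages from the reported counts; the `filter_by_confidence` helper and its detection format (a dict with a `confidence` key) are hypothetical, and it applies the "at least 0.5" threshold stated in the text.

```python
def relative_reduction(before: int, after: int) -> float:
    """Fractional decrease from before to after (0.25 means 25 % fewer)."""
    return (before - after) / before


def filter_by_confidence(detections, threshold=0.5):
    """Keep detections whose confidence is at least the threshold.

    Each detection is assumed to be a dict with a 'confidence' key.
    """
    return [d for d in detections if d["confidence"] >= threshold]


# Counts reported for missions M1 and M2
total = relative_reduction(3920, 2629)
confident = relative_reduction(691, 586)
print(f"all instances: {total:.1%} fewer, confidence filter: {confident:.1%} fewer")
# prints: all instances: 32.9% fewer, confidence filter: 15.2% fewer
```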

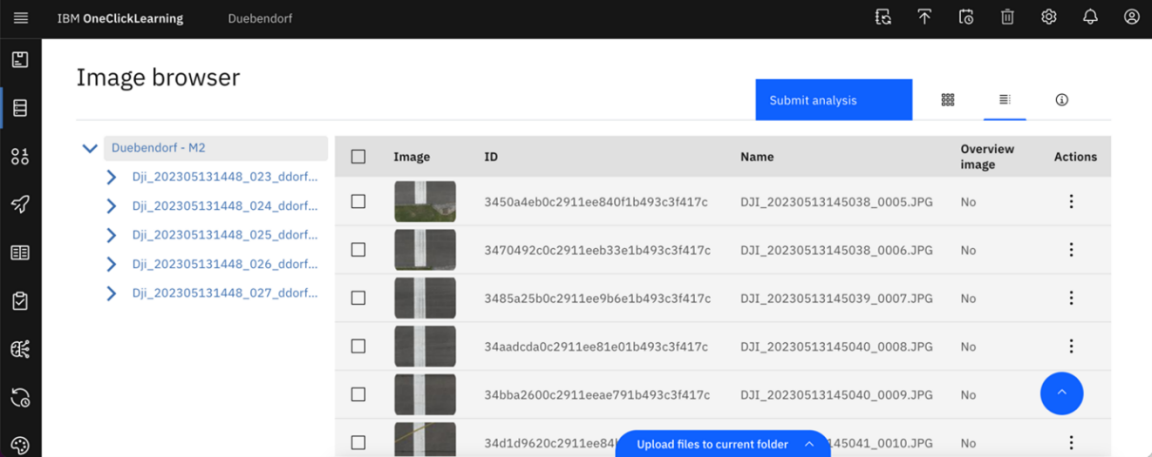

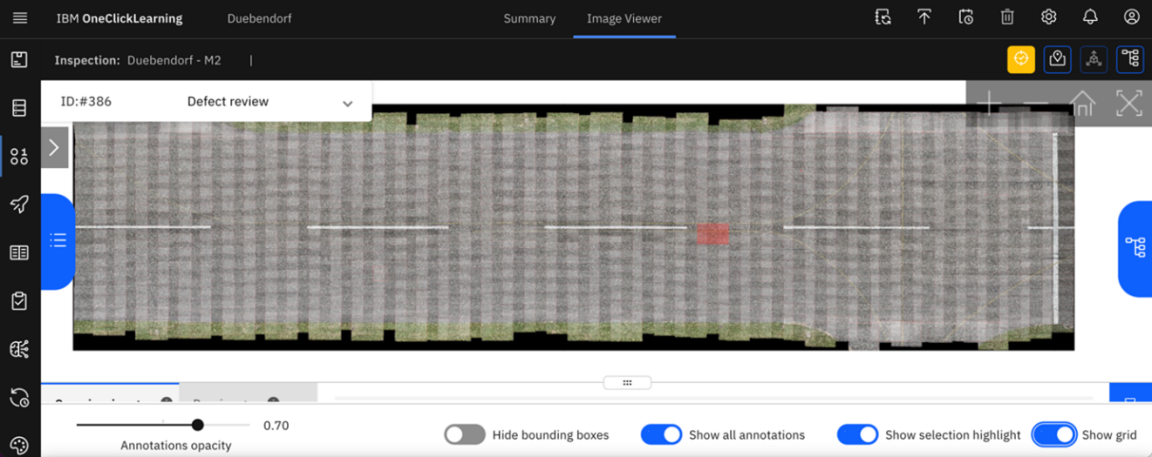

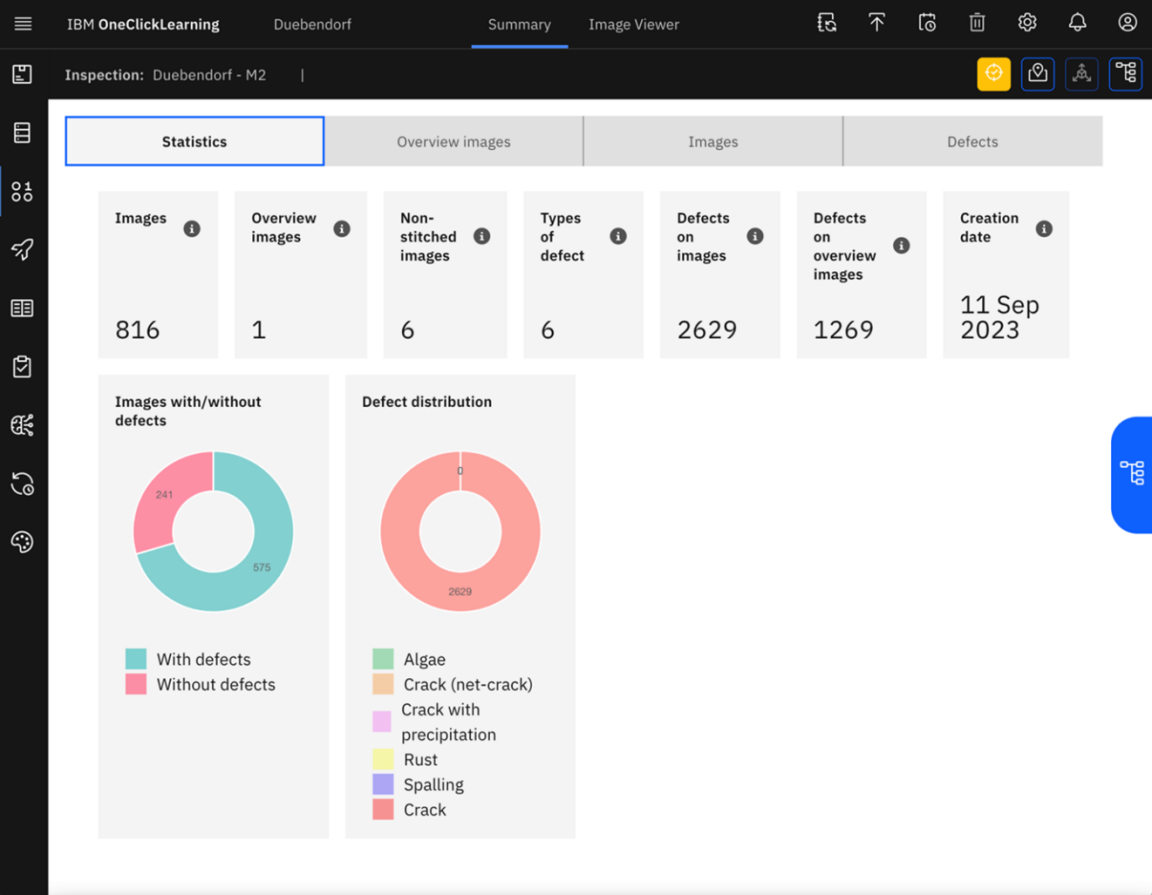

IBM OCL tool for presenting results

The IBM One-Click-Learning (OCL) platform is a research instrument developed to present and display AI results in a comprehensive manner. It is used to visually represent, generate, demonstrate and iterate AI results. In this project, the image and prediction viewers were used to display cracks and defects on the runway in Dubendorf.

Features and views of the OCL tool:

- Image viewer: Allows access to and navigation of all provided images (> 15,000) in structured folders, focussing on the three different missions and image qualities.

- Prediction viewer: Displays the results of the AI models as pixel-accurate segmentation masks of defects in the runway and automatically extracts associated attributes such as crack length.

- Overview viewer: Allows the user to view large sections of the runway and navigate in real time. It also enables understanding of the context in which a defect was detected.

- Merged predictions and overview: Enables the user to understand the relationships and positions of all defects and consolidates multiple detections of a defect in different images into a single prediction.

- Statistical viewer: Aggregated statistics are provided on all detected defects, both for individual images and for the entire merged overview.

- Reporting functionality: Reports can be created for all data captured in the tool, with detailed views of each defect, important attributes and direct links to OCL for an enlarged view of the visualised defect. Georeferencing allows runway maintenance staff to locate and check defects on the runway manually and to repair the damage with sealant.
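Two of the features above, consolidating multiple detections of one defect across images and georeferencing defects for staff on the ground, can be sketched together: convert each detection’s pixel position into map coordinates with an affine geotransform (the six-coefficient convention used by GDAL), then greedily merge detections that lie within a small radius of each other. The 5 cm radius, the greedy merging rule and the data layout are illustrative assumptions; the report does not describe how OCL implements these steps.

```python
import math


def pixel_to_map(geotransform, col, row):
    """Map coordinates (x, y) of an image position (col, row).

    geotransform follows GDAL's six-coefficient convention:
    (x_origin, pixel_width, row_rotation, y_origin, col_rotation, pixel_height)
    """
    gt = geotransform
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def merge_detections(points, radius_m=0.05):
    """Greedily merge map points lying within radius_m of an existing cluster.

    Returns one (x, y, count) tuple per merged defect, where (x, y) is the
    mean position of the detections assigned to it.
    """
    clusters = []  # each cluster: [sum_x, sum_y, count]
    for x, y in points:
        for c in clusters:
            cx, cy = c[0] / c[2], c[1] / c[2]
            if math.hypot(x - cx, y - cy) <= radius_m:
                c[0] += x
                c[1] += y
                c[2] += 1
                break
        else:  # no cluster close enough: start a new one
            clusters.append([x, y, 1])
    return [(sx / n, sy / n, n) for sx, sy, n in clusters]


# Two hypothetical north-up images with 0.75 mm pixels, 1 m apart.
gt_a = (1000.0, 0.00075, 0.0, 2000.0, 0.0, -0.00075)
gt_b = (1001.0, 0.00075, 0.0, 2000.0, 0.0, -0.00075)
points = [
    pixel_to_map(gt_a, 1400, 800),  # crack seen in image A
    pixel_to_map(gt_b, 70, 800),    # same crack seen in image B
    pixel_to_map(gt_a, 100, 100),   # a different crack
]
merged = merge_detections(points)
print(len(merged))  # 2
```

The merged map positions are what maintenance staff would navigate to on site; a production system would also merge the segmentation masks rather than only their positions.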

In the project, OCL was used primarily to demonstrate and verify results, particularly for missions M1 and M2, and to facilitate the analysis and interpretation of large volumes of data. IBM Research provided a comprehensive report and specific crack detections for the M2 data. This is especially relevant for the responsible infrastructure operators who will use the project results.

IV. Potential of AI in infrastructure maintenance

The Innovation Sandbox project «Automated Infrastructure Maintenance - Drone Inspections with Computer Vision» has successfully demonstrated that large volumes of data can be collected systematically and evaluated by AI models. Cracks were identified correctly in all three missions (M1, M2 and M3). In practice, the collection process must be efficient so that large areas can be scanned within a reasonable time. IBM Research has shown that M1 and M2 are superior to M3. Furthermore, a key finding is that the resolution of M2 is sufficient to detect the relevant cracks, to document them fully and to make sound decisions about the overall condition of the infrastructure. A drone can capture the entire runway in half a day, which in practice allows for regular inspections (e.g. twice a year, in spring and autumn, to capture seasonal differences on an ongoing basis).

High-resolution image data of airport runways like the one in Dubendorf is usually difficult to obtain. Being able to use real-world data within the Innovation Sandbox for AI is therefore of great significance for the Zurich Metropolitan Area as a location for research and innovation. It gives institutions like IBM Research the opportunity to evaluate and improve the latest AI algorithms and strategies in a relevant context. This type of data will also help to advance AI technology for automated image recognition in the years to come.

Furthermore, every project in which AI applications are used successfully increases confidence that the foundation models developed, in this case by IBM Research, operate reliably in a broad range of contexts. This suggests that this type of image recognition could also be used to inspect the facades of large buildings, bridges, dams, tunnels or road surfaces.

Confirmation of added value of automated runway inspections

This project has confirmed that image recognition offers great potential for the automated inspection of infrastructure elements. Although the AI application is not yet in operational use, it can be assumed that it will help to address the four core challenges of manual inspection described in chapter one.

Greater efficiency

The project has shown that using drones to collect imagery saves time. Image recognition brings further efficiency gains compared with traditional inspections performed by ground staff without AI-based reporting. Even with AI technologies in use, an expert will in most cases need to validate and evaluate any detected defects on site. However, the ground staff can then carry out the inspection with an existing basis for decision-making. Ideally, reports of the largest defects would be forwarded directly to the maintenance company responsible for the repair, which would streamline and speed up the entire process.

Better documentation

The opportunity to create digital images of infrastructure elements and to continuously check them offers considerable added value. At present, complete, objective and uniform documentation is, in practice, often lacking. Digital imagery is especially useful for detailed and systematic checking. It promotes quality assurance given that existing cracks and already repaired defects can be monitored accurately and over a longer period of time.
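Monitoring cracks and repaired defects over time, e.g. between a spring and an autumn flight, requires matching each defect in a new survey to the earlier one. The minimal sketch below assumes that both surveys are georeferenced to the same coordinate system; the 5 cm matching radius and the greedy first-match rule are illustrative choices, and a defect that is no longer detected may have been repaired or simply missed.

```python
import math


def compare_surveys(previous, current, radius_m=0.05):
    """Match defects of a new survey to those of an earlier one.

    previous, current: dicts mapping a defect id to its (x, y) map position
    in metres, in the same coordinate system. Each previous defect can be
    matched at most once. Returns three sorted lists:
    (previous_id, current_id) pairs that persist, ids of new defects, and
    ids of previous defects that were not detected again.
    """
    persisting, new = [], []
    matched_prev = set()
    for cid, (cx, cy) in current.items():
        match = None
        for pid, (px, py) in previous.items():
            if pid not in matched_prev and math.hypot(cx - px, cy - py) <= radius_m:
                match = pid
                break
        if match is None:
            new.append(cid)
        else:
            matched_prev.add(match)
            persisting.append((match, cid))
    not_detected_again = [pid for pid in previous if pid not in matched_prev]
    return sorted(persisting), sorted(new), sorted(not_detected_again)


# Hypothetical spring and autumn surveys
spring = {"p1": (0.0, 0.0), "p2": (5.0, 0.0)}
autumn = {"c1": (0.01, 0.0), "c2": (9.0, 1.0)}
persisting, new, gone = compare_surveys(spring, autumn)
print(persisting, new, gone)  # [('p1', 'c1')] ['c2'] ['p2']
```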

Fewer sources of human error

Automated systems help to minimise human error, which often arises from differing and inconsistent assessments by experts. Especially when staff change or are in short supply, traditional inspection methods may lead to varying evaluations. Automating inspections with AI allows for consistent and objective analysis and evaluation of infrastructure elements, making the results more reliable and traceable.

Greater safety

In dangerous environments such as dams or bridges, drones can carry out inspections to minimise the risks to humans. Although this aspect was not the focus of the current project, it is important, particularly since inspectors must expose themselves to potential hazards in such environments. Using image recognition technology in such environments can improve safety while providing a detailed and precise analysis of the structures concerned.

Outlook

The objective of the Innovation Sandbox for AI is to strengthen the innovation ecosystem of the Zurich Metropolitan Area. The project at hand is contributing to this objective. However, widespread use of image recognition in infrastructure maintenance is still a distant prospect. Therefore, to make even better future use of the potential of Zurich as a location for innovation, the project team proposes the following action points:

- Integration of automated inspection into existing processes

To maximise the added value of automated inspections, it is important to integrate these technologies seamlessly into the existing processes of infrastructure operators. This entails, among other things, developing interfaces that link the various applications and deliver the results in a format that infrastructure operators can process further. Especially during site inspections on foot, maintenance staff need access to a digital model of the runway with GPS functionality so that they can locate and verify on site the cracks identified by automated crack detection. Furthermore, best practices should be shared across organisational boundaries so that knowledge and experience are exchanged effectively, which eases the introduction and use of automated inspection technologies.

- New open data approaches within the innovation ecosystem

To strengthen the innovation ecosystem in the Zurich Metropolitan Area, it is essential to develop new open data approaches. To that end, more data based on specific use cases from industry, research and public administration should be made available. This will enable other stakeholders within the innovation ecosystem to implement similar projects. Providing and using large volumes of data from airport runways, bridges and dams will allow innovative solutions to be developed and the potential of AI-based inspections to be better exploited.

- Transferability to other infrastructure elements

The transferability of the tested methods and technologies to other infrastructure elements such as bridges, roads and dams needs to be explored. Every use case comes with specific opportunities and challenges, which is why interdisciplinary dialogue and collaborative thinking across infrastructure categories are important. Such dialogue will promote solutions tailored to diverse infrastructure elements, enabling broad use of innovative image recognition technologies in infrastructure maintenance.

- Proactive handling of regulatory questions

Overcoming regulatory obstacles is crucial for implementing AI-based inspections with drones. Important measures in this regard include setting up test environments for experimental applications. In-depth discussions with regulators, including the Federal Office of Civil Aviation (FOCA), and with industry associations (e.g. Drone Industry Association Switzerland) are also important in order to define requirements early on, adapt laws in a forward-looking manner and speed up certification processes. These strategies can help to lower barriers to innovation and promote the introduction of new inspection technologies in the Zurich Metropolitan Area and across Switzerland.

- Strengthening social acceptance of drones

A key factor for the successful implementation of AI-based drone technology in infrastructure maintenance is strengthening the social acceptance of drones. It is important to have an open and inclusive dialogue with the general public in order to communicate opportunities and risks transparently and to clear up misunderstandings. Information events, workshops and educational initiatives could help to increase knowledge about drone technology. Furthermore, meeting zones and experience parks could be set up where people can see drones up close, experience their features and applications and thereby gain a better understanding of drone technology. These measures could help to reduce prejudice and build trust in drone technology, which in turn will support the implementation of innovative inspection technologies.

Case study

A project of IBM Research Zurich served as a case study within the Innovation Sandbox for AI. IBM Research Zurich submitted the project proposal in spring 2022. Based in Rüschlikon, IBM Research Zurich is IBM’s European research centre and a leading institute in various fields, including information technology, cloud and AI. The content of this report was created between June and October 2023 based on the use case «Automated Infrastructure Maintenance - Drone Inspections with Computer Vision».

Contact

Amt für Wirtschaft - Standortförderung

8090 Zürich

Monday to Friday

8.00 to 12.00 and

13.30 to 17.00